Infosec Underpinnings

I’ve been lucky enough to help build security teams from the ground up at a few different companies. Each iteration had to reckon with questions about a security team’s purpose, its organization, and how to measure its success.

Initially these questions struck me as unproductively abstract, but over time I’ve found that the answers matter greatly, because they shape what you can accomplish, how quickly, and how well.

We will cover measuring success in a later article; here we take on the purpose and organization of Infosec teams.

- Purpose = what success looks like

- Organization = how to partition the problem space

Purpose

To answer the existential question of information security, you could do worse than the Wikipedia definition of risk management:

Risk management is the identification, assessment, and prioritization of risks followed by coordinated and economical application of resources to minimize, monitor, and control the probability and/or impact of unfortunate events or to maximize the realization of opportunities.

Most Infosec teams work within this definition, scoped to the technology arena. Every vuln we find and fix in the codebase, every server we keep patched, and every employee we train not to fall for phishing decrements our cosmic “likelihood of getting hacked” counter.

“Don’t get hacked” is our main goal, and reducing risk is how we accomplish it. Risk reduction is well and good, and it is the lens through which most people view security work. But there is another way: security as enablement.

Security as enablement

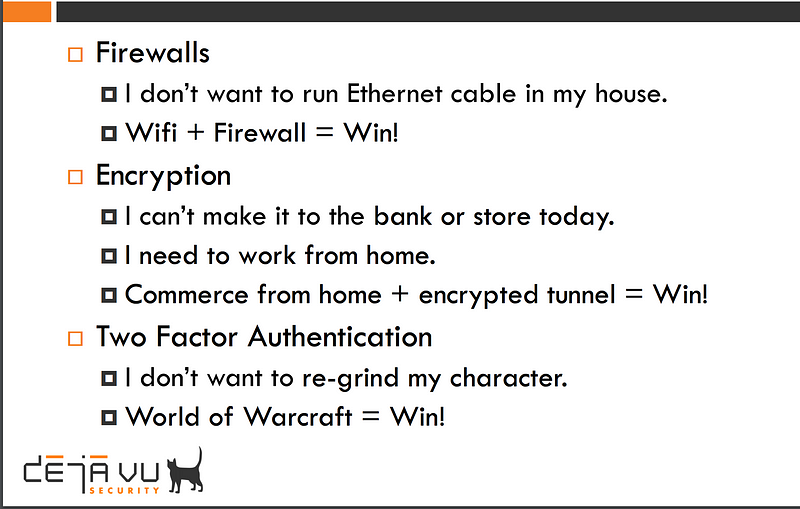

Security as enablement means reducing risk to the point where entirely new things become possible.

For example, Netflix recently launched offline viewing. Ensuring that customers couldn’t trivially download and store the content took security work, and that work is what allowed the feature to exist. That is security as enablement.

A movie studio transferring footage from the field in real time, because it’s safely encrypted, would be another.

Security as enablement applies internally as well. Running an SSO portal means your employees don’t need to remember 20 different passwords. A secure-by-default framework makes a tricky area (like parsing URLs) both easier and more secure. Some of these projects bundle the sweet (new capabilities for the business) with the sour (security), but others are straight wins for everyone involved.
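As a rough illustration of what “secure by default” can mean for URL handling, here is a minimal Python sketch of a redirect check that a framework might ship so individual engineers don’t have to re-derive URL-parsing edge cases. The function name `is_safe_redirect` and the host-allowlist approach are hypothetical, not taken from any particular framework, and the checks cover common open-redirect tricks rather than every browser quirk.

```python
from urllib.parse import urlsplit


def is_safe_redirect(url: str, allowed_hosts: set[str]) -> bool:
    """Hypothetical helper: allow same-site relative paths or http(s) URLs on allowlisted hosts."""
    url = url.strip()
    # Browsers may treat backslashes as "/" and silently drop some control characters,
    # so refuse anything containing them rather than guessing how it will be read
    if "\\" in url or any(ord(ch) < 32 or ord(ch) == 127 for ch in url):
        return False
    parts = urlsplit(url)
    # Block javascript:, data:, and other non-web schemes outright
    if parts.scheme not in ("", "http", "https"):
        return False
    if not parts.netloc:
        # "/dashboard" is a plain relative path and is allowed; "https:evil.com"
        # (scheme but no host) may be resolved off-site by browsers, so reject it
        return not parts.scheme
    # hostname is lowercased and excludes any "user@" prefix and ":port" suffix
    return parts.hostname in allowed_hosts
```

The design errs toward refusing anything unusual; a caller that gets `False` would typically fall back to a default landing page instead of trying to repair the URL.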

Collectively, the security community has had its biggest successes when working on projects that enable.

Pillars of purpose

A security team exists to understand, judge and act upon risk.

Whether the aim is enablement or simple risk reduction, the work comes from the same pool, just prioritized slightly differently. I see three main parts.

Understand

The first job of an Infosec team is to know the vast universe of risks that could befall the company; in other words, the fullest possible set of bad things that could happen.

Examples:

- SQL injection in your product

- Not patching OpenSSL after Heartbleed

- An employee walking out the door with a laptop

This understanding comes from the aggregate knowledge of the team itself, which is why diversity of experience is desirable.

The other half is knowing the full set of work happening in your company that could have security implications. Visibility into “the field of things going on” is the raw material your security team works from: the new project that is launching, the new acquisition, the new datacenter, the new remote office’s practice of setting every password to $username + 1234. If you don’t know about it, you cannot secure it.

Omniscience is the unattainable goal to strive for here, but a middle ground of being looped in to all relevant goings-on at the company is quite possible. I’ve found that the company’s general opinion of the security team is the main lever that determines how easy or hard this is. Don’t be a jerk who inhibits progress, and this kind of awareness should flow to you easily.

Judge

While the potential risks are vast, they are also tractable. Your job is to weigh which of them are most likely, most damaging, and most urgent. Are you more likely to lose your AWS keys to an awesome application security vulnerability, or to forget to wipe hard drives before IT sells them on eBay?
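One common way to make that weighing explicit is a likelihood × impact score, with urgency letting an item jump the queue. The sketch below assumes that heuristic; the `Risk` class, its 0-to-1 likelihood and 1-to-5 impact scales, and the sample numbers are all hypothetical, and in practice the inputs are judgment calls rather than measurements.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: float     # hypothetical estimate, 0.0 to 1.0, that this happens in the next year
    impact: int           # hypothetical damage score, 1 (minor) to 5 (company-ending)
    urgent: bool = False  # e.g. being actively exploited right now

    def score(self) -> float:
        # Expected-loss style heuristic: how likely times how bad
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Urgent risks first, then everything else by descending score."""
    # False sorts before True, so `not r.urgent` puts urgent items at the front
    return sorted(risks, key=lambda r: (not r.urgent, -r.score()))


# Illustrative, made-up numbers only
risks = [
    Risk("AWS keys lost to an appsec bug", likelihood=0.1, impact=5),
    Risk("Unwiped drives sold on eBay", likelihood=0.3, impact=3),
    Risk("Unpatched OpenSSL after Heartbleed", likelihood=0.5, impact=4, urgent=True),
]
for risk in prioritize(risks):
    print(f"{risk.score():.2f}  {risk.name}")
```

With these made-up inputs, the urgent Heartbleed item comes first, then the unwiped drives (0.9), then the AWS keys (0.5). The value of writing it down is less the arithmetic than forcing the estimates into the open where they can be argued about, since the right numbers depend heavily on your company’s specifics.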

The specifics of your company come into play a lot here. MySpace survived the Samy worm, but 40+ bitcoin companies couldn’t weather having their wallets stolen. Different vulnerabilities bubble up into different outcomes, which affect different companies differently. Even for technology companies, the technology != the business. Target’s stock didn’t really dip when it got hacked, but it did after its disastrous Canadian expansion. Businesses are more resilient than many of us security people may think.

Globally true advice on how best to judge risk is a fool’s errand, but here are a few situations where we earn our paychecks by judging risk:

- Growth wants to set every new user’s password to “foo” so it’s easy to remember and more people sign up. Is this the right tradeoff?

- We found 2 RCE bugs and 40 XSS issues in the last year. How much time do we invest in globally exterminating each class, given that RCE > XSS in severity?

- Do we build tools to curb potential internal abuse, or invest time finding externally facing vulnerabilities?

- Do we shut the site down in the nightmare scenario where user data is being exfiltrated?

- Do we invest more time in finding the next 10 security problems, or in a training class for engineers to prevent future problems?

Answering these questions is the real meat of our jobs. It’s where we make the decisions that ultimately turn out to be good or bad.

Act

The obvious last step: do something about it. Some security teams don’t have the mandate to go out and fix things themselves, which makes me sad. At my current job, we try to fix around 10% of the code-level vulns we find ourselves. Beyond fixing the problem, this builds empathy on both sides and a better understanding of the codebase.

Often you have multiple ways to act. If your codebase is riddled with SQL injection, you could fix it yourself, ban future vulnerable code from being checked in, or mandate a security training class for engineers.
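The “ban future vulnerable code from being checked in” option is often implemented as a check that runs before commit or in CI. Below is a deliberately crude Python sketch, assuming Python code that calls a DB-API style `.execute()`: it flags lines where the query appears to be built with an f-string, `%` formatting, `.format()`, or `+` concatenation. The regex and the `find_violations`/`main` names are hypothetical; a real rule would more likely live in an AST-based linter, and this version misses multi-line calls and can produce false positives.

```python
import re

# Hypothetical rule: an .execute( call whose first argument is an f-string, or a
# string literal immediately followed by %, +, or .format( (i.e. SQL built by hand)
SUSPICIOUS_SQL = re.compile(
    r"""\.execute\(\s*(f["']|["'][^"']*["']\s*(%|\+|\.format\())"""
)


def find_violations(source: str) -> list[int]:
    """Return 1-based line numbers that look like string-built SQL."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if SUSPICIOUS_SQL.search(line)
    ]


def main(paths: list[str]) -> int:
    """Scan each file; return 1 (fail the commit) if anything was flagged."""
    failed = False
    for path in paths:
        with open(path, encoding="utf-8") as f:
            for lineno in find_violations(f.read()):
                print(f"{path}:{lineno}: SQL built from strings; use query parameters")
                failed = True
    return 1 if failed else 0
```

A pre-commit hook could call `main(sys.argv[1:])` with the staged file paths and block the commit on a nonzero return. Parameterized calls such as `cur.execute("... WHERE id = %s", (uid,))` pass, because the query string is followed by a comma rather than an operator.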

NIST organizes the core actions as Identify, Protect, Detect, Respond, and Recover, which feels reasonable to me, though I haven’t thought about it enough to know where I really stand.

Organization

If you get hacked, no one outside your company cares how. Really.

No one cares that it was “another team’s responsibility,” or whether the method was clever or clumsy. You could have the world’s most secure codebase, but a weak employee password on an internet-facing production system still sinks you. This means you need to cover all your bases. So what are your bases?

I see them as:

- Code

- Systems

- People

Code: these are security flaws in your product or in software you rely on to run the company.

Systems: this is having an unpatched JIRA instance exposed to the Internet or (god forbid) an unpatched WordPress install with sensitive data in it. It is also securely partitioning corp and prod.

People: this is the catchall, encompassing anything an employee or user can do to increase risk to the company or to one another. This is phishing; this is leaving the unreleased iPhone prototype at a bar. This is user-interaction-required, copy/paste self-XSS on Facebook via javascript: links. This is your users on World of Warcraft spamming gold-selling services that are actually malware.

These areas don’t always map to teams, but all three likely need to be accounted for at any modern company. Across all of these domains, an Infosec team is responsible for the security of both the company itself and the products it produces.

That last scenario is worth highlighting: you are responsible not only for yourself, but also for how your users treat one another and how they could harm one another. Facebook has a whole team to fight spam; Uber has a whole team to make the inside of an Uber the safest physical place you could be. Morally, I believe this is as it should be: companies have a responsibility to provide their users with a safe environment.

Conclusion

Security is hard. Orienting and allocating effort around the most important areas with imperfect information is also hard. This is more of a “here is how I think about things” piece than a prescriptive article, but a few conclusions have held true everywhere I’ve worked:

- Doing it right requires a systems approach. Solving the “leaving a phone in a bar” problem takes MDM, encryption of the data on the phone, new-employee training, a culture that won’t punish someone for admitting they lost the phone, and a well-staffed response team.

- Security teams: don’t just dictate the path; walk it alongside the rest of the company. Sniff out places where you can build your way to security instead of critiquing your way toward it.

- Ruthlessly prioritize. Building libraries, good training, and a solid culture are among the highest-leverage things a security team can do.

Engineering is about tradeoffs. Security engineering is about tradeoffs. Running a security team is about tradeoffs. May you choose the correct ones.