Product security primitives

Working in software security for a while, I’ve recognized a few core ideas that have helped guide the efforts of a product security team. I want to share these primitives, and the opinions built upon them, as essentially how I think about product security.

All of these ideas are seen through the lens of product/application security but may apply to other aspects of information security as well.

Purpose

Taking a simplified view, companies hire a security team to prevent them from being hacked. Product security is responsible for the portion of that goal surrounding the security of the codebase. This is largely done through finding, fixing and preventing security vulnerabilities (“bugs”).

All security efforts are inherently asymmetric. The defenders have to win everywhere, every day, but an attacker only has to win once.

Put together, these two truths suggest that the ultimate goal is to eliminate, and forever prevent, all security flaws in the codebase. Let’s call this goal “perfect security”. It is unquestionably an aspirational goal, but for the purpose of defining what we are here to do it feels like a good target to aim for.

What is bug (baby dont hurt me)

Everything in product security revolves around bugs. Bugs are really a proxy for “insecurity” and perhaps more accurately an “observed example of insecurity”.

Every bug is a story and a lesson. The story describes a failure of some kind that can teach us something. Taken in aggregate, security bugs localized to a feature, a framework, a pattern, a team or a codebase are big blinking arrows saying “look here”.

Bug fates

I believe the fate of a security bug is what we really care about. This is the core idea that guides lots of work.

Myspace had many XSS bugs before (and after) the Samy worm, but the difference was how that bug affected the company and its users. Simply tallying the raw number of XSS bugs wouldn’t have reflected the impact of Samy’s finding.

Since quantity and severity don’t fully capture the impact, we need to describe the outcomes.

These outcomes can be broadly categorized as:

- Exploited — This bug was used for harm

- Unfound — The bug still lurks, unfound by us or others

- Found — We became aware of this issue and fixed it

  - Found outside

  - Found by security team in code

  - Found by security team pre-code

- Prevented — A bug that is unlikely to happen again

  - Mitigated

  - Prevented

Exploited is the worst outcome for a bug and prevented is the best. Because it needs a name, we can call this idea “outcome buckets”. I feel like many of us security practitioners think of things this way. Let’s look at each outcome in detail.

Exploited — This is bad. This is Gmail being hacked by China; this is the Tunisian government finding dissidents via lack of HTTPS. For more examples, OWASP has a corpus of a decade of security flaws, some of which were exploited.

Unfound — Unfound by anyone is not a good state, as it is essentially a race between your internal security team and the entire world. Unfortunately, if you are unaware of a lurking security flaw there is nothing you can do about it, which is a core part of why security is hard. Thus a primary goal for a product security team is to excavate security bugs, turning them from unfound to found. For bugs in this bucket there is only one action you can ever take: find the bug.

Found — There is a lot of range in this bucket, and I believe there are sub-buckets inside it:

- Pre-code — before the insecure idea or design becomes code

- Code — Found as the software is being written or before it’s shipped outside the company to the greater world.

- Live/Externally — Found once the software is live, often by someone outside the company, such as through a bug bounty program.

While finding a bug is always good, finding it in earlier stages of the software development lifecycle is better because the bug is cheaper to fix, safer for users and easier for an engineering team. This is the essence of the SDLC.

So our goal when finding a bug is to consider how we could have found it earlier in the development process, while accepting the reality that software is built in stages and, as such, security involvement will differ by stage. Design reviews, more or better security audits, external audits, static analysis and other tooling all apply to this bucket.

Prevented — Preventing a bug is the best possible outcome. The canonical example is SQL injection being prevented by parameterized queries, but I like to take it further and believe truly preventing a class of bugs includes:

- Library/framework support, like an ORM or parameterized queries

- Enforcement/safeguards in the form of commit hooks or tooling to enforce the secure patterns and surface non-blessed ways of doing things to the security team.

- Training & Culture to explain and emphasize what the secure path is and why taking it is important.
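To make the canonical example concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, the function names and the payload are made up for illustration; the point is only the difference between splicing input into the SQL text and binding it as a parameter.

```python
import sqlite3


def make_demo_db():
    # In-memory database with a hypothetical users table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: the input becomes part of the SQL text itself.
    query = "SELECT id, name FROM users WHERE name = '%s'" % name
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the input is bound as data and never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_demo_db()
payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR matches every row
print(len(find_user_safe(conn, payload)))    # 0: treated as a literal (odd) name
```

The safe version is the "library support" bullet. On its own it does nothing to stop the next engineer from writing the unsafe version, which is why the other two bullets matter.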

Prevention encompasses two separate ideas: mitigation and prevention. I see mitigation as reducing the damage if an issue does occur, while prevention reduces the likelihood of an issue ever happening in the first place.
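The enforcement idea from the list above can be sketched as a small check that a commit hook might run. This is a rough heuristic, not a real static analyzer: the regular expression and the `find_suspect_lines` name are my own assumptions. It only flags `execute()` calls whose SQL string is built with `%`, `+`, `.format()` or an f-string, so that someone on the security team can take a look.

```python
import re

# Hypothetical heuristic: an execute() call whose first argument is an
# f-string, or a string literal immediately followed by %, + or .format(.
SUSPECT_SQL = re.compile(
    r"""\.execute\(\s*(?:f["']|["'][^"']*["']\s*(?:%|\+|\.format\())"""
)


def find_suspect_lines(source):
    """Return (line_number, line) pairs that look like string-built SQL."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if SUSPECT_SQL.search(line):
            hits.append((number, line.strip()))
    return hits


sample = '''
cur.execute("SELECT * FROM users WHERE id = ?", (user_id,))
cur.execute("SELECT * FROM users WHERE id = %s" % user_id)
cur.execute(f"SELECT * FROM users WHERE id = {user_id}")
'''

for number, line in find_suspect_lines(sample):
    print(number, line)
```

A check like this will miss plenty (queries built on an earlier line, for example) and will occasionally flag safe code. I think of it as a tripwire that surfaces non-blessed patterns rather than a gate that proves anything.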

Bug success

This is pretty abstract stuff, so let’s look at an example.

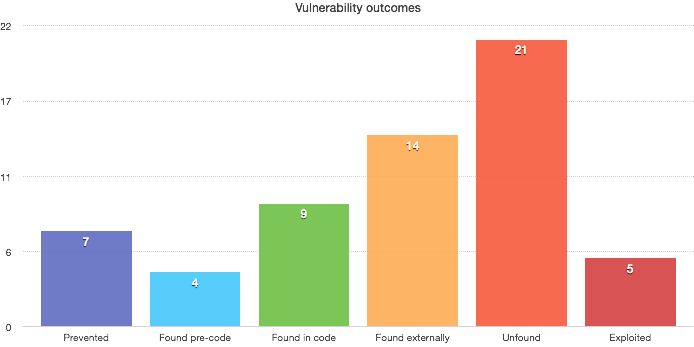

Imagine we have perfect knowledge of all the bugs in existence for a month of security work. The breakdown might look like this:

From this our goal is clear — move the maximum number of bug outcomes in the direction of “prevented”. Any time we can find a bug before someone outside the company does, it’s a win; any time we can find a security bug at all before it’s exploited for real harm, it’s a win; and so on.

The primary goal of a security team is to move every bug outcome into prevented. This is the “perfect security” defined earlier.

We can view all security efforts through this lens. Static analysis run at check-in time moves a bug that would have been found externally to found internally. Design reviews catch bugs before they become code. A bug bounty program reduces the likelihood of bugs landing in Exploited by providing a pressure release, and it moves bugs from Unfound into Found: it’s a two-for-one.

It’s worth explicitly calling out — most bugs will be found in these latter stages, once software is shipped, because that is the stage most software spends most of its life in: shipped and running. This does not mean you are bad at product security.
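One way to play with this lens is to model the outcome buckets as an ordered enum and treat each security effort as moving bugs from a worse bucket to a better one. Everything below is a sketch: the strict worst-to-best ordering is my reading of the list above, and the monthly numbers are invented.

```python
from collections import Counter
from enum import IntEnum


class Outcome(IntEnum):
    # Ordered from worst to best (an assumed ordering of the outcome buckets).
    EXPLOITED = 0
    UNFOUND = 1
    FOUND_EXTERNALLY = 2
    FOUND_IN_CODE = 3
    FOUND_PRE_CODE = 4
    MITIGATED = 5
    PREVENTED = 6


def improve(tally, source, target, count=1):
    """Return a new tally with `count` bugs moved to a strictly better bucket."""
    if target <= source:
        raise ValueError("target bucket must be better than the source")
    if tally[source] < count:
        raise ValueError("not enough bugs in the source bucket")
    updated = Counter(tally)
    updated[source] -= count
    updated[target] += count
    return updated


# Invented numbers for one month of perfect knowledge.
month = Counter({
    Outcome.EXPLOITED: 1,
    Outcome.UNFOUND: 6,
    Outcome.FOUND_EXTERNALLY: 4,
    Outcome.FOUND_IN_CODE: 5,
    Outcome.FOUND_PRE_CODE: 2,
    Outcome.PREVENTED: 3,
})

# Static analysis at check-in: two bugs caught internally instead of externally.
after = improve(month, Outcome.FOUND_EXTERNALLY, Outcome.FOUND_IN_CODE, 2)
for outcome in Outcome:
    print(outcome.name, month[outcome], "->", after[outcome])
```

The total number of bugs never changes in this model; only where they land does. That is the whole idea of judging work by which bucket it moves bugs into.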

Bug reality

Like all models this one is imperfect. Key weaknesses:

- It’s impossible to measure unfound bugs.

- It’s difficult to honestly measure prevented bugs.

- You don’t always know when a bug is exploited.

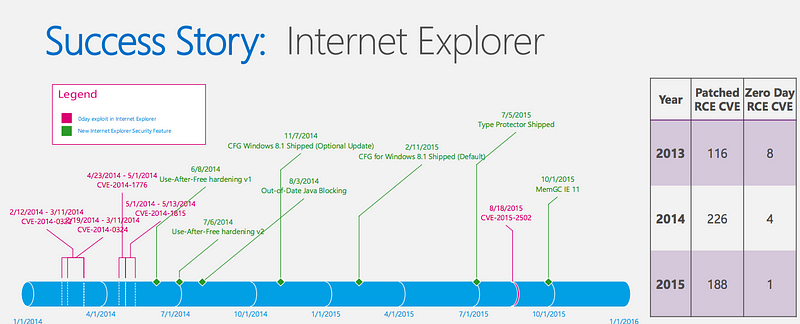

As practitioners, the exact outcome we are striving for, preventing a bug from being written in the first place, is really hard to measure. That sucks. The best approach I’ve found is comparing the before and after to approximate the impact of fixing a class of bugs. The best example of this I have seen is this work from the Microsoft security team, where you can see the zero-day flaws decrease over time due to investment.

My categorization ignores plenty of subtlety: what if a bug is found but unfixed? Prevented but regressed? Unfound but unexplainable? In general, though, I’ve found it a useful way to view things.

Another big weakness is that we have been treating all security bugs as equal to one another, which is obviously wrong. The most accurate answer is a blog post of its own, but for now know that some bugs are more meaningful than others, and CVSS with an emphasis on the environmental factors is a decent approximation of importance.

Opinions

If we accept all these primitives, then I will share a few opinions that have guided how I’ve invested effort among the security teams I’ve led in the past. This is all about optimizing the effort expended for maximum “security” in return.

Opinion — Shifting bugs from a less to a more favorable outcome bucket is always good, but some jumps are more impactful than others. Known -> prevented is the biggest win possible. Wiping out XSS with CSP in block mode is the rare tangible, easily explainable, clear-cut security work.
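For readers who haven't worked with CSP, "block mode" means sending the policy in the enforcing `Content-Security-Policy` header rather than `Content-Security-Policy-Report-Only`, which only reports violations. Here is a minimal sketch of building that header. The `build_csp_header` helper and the policy are illustrative assumptions; a real policy depends heavily on how the application loads its scripts.

```python
def build_csp_header(directives, enforce=True):
    """Return a (header_name, header_value) pair for a CSP policy."""
    name = (
        "Content-Security-Policy"
        if enforce
        else "Content-Security-Policy-Report-Only"
    )
    value = "; ".join(
        f"{directive} {' '.join(sources)}"
        for directive, sources in directives.items()
    )
    return name, value


# Illustrative strict-ish policy: same-origin scripts only, no plugins.
policy = {
    "default-src": ["'self'"],
    "script-src": ["'self'"],
    "object-src": ["'none'"],
    "base-uri": ["'none'"],
}

name, value = build_csp_header(policy)
print(f"{name}: {value}")
```

Teams commonly run with `enforce=False` first to see what would break, then flip to enforcing once the reports quiet down. The flip is the moment the bug class actually moves buckets.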

Opinion — Unknown -> Known is huge. All security work relies on a base layer of awareness and visibility into what is going on at the company. Awareness that project X is shipping, awareness that a known shaky team is actively working on a sensitive codebase: awareness of what is going on is a prerequisite to prioritizing what to look at and diving deeper into concerning areas. It might not feel like “real work” to meet with PMs and managers to ensure you are aware of their roadmap, but it is.

Opinion — Keep a balance of finding and fixing. Product security is unique in that we essentially make our own work. If we look harder for flaws, it generates projects we could complete to then prevent those flaws. Keep in mind the balance between finding more work and completing what is already on your plate.

Opinion — Measurement. Meaningful metrics are very hard. The two most useful over time to me have been:

- % of “really bad” bugs vs total security bugs

- Classes of bugs going down with investment to prevent them

There are many ways to twist metrics, and in security it’s especially hard. Are more bugs a good thing or a bad thing? If it’s turning unfound into found, then it’s good. If it’s simply writing more bugs overall, it’s bad. Explaining a security team’s work to higher-ups can be hard; accentuating the positive, or framing security as enablement, has worked for me at times.
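The two metrics above are simple to compute once bugs are tracked with a class, a severity and a time period. Here is a minimal sketch, assuming a made-up record shape and treating "critical" and "high" as the "really bad" tier.

```python
from collections import Counter


def severe_share(bugs, severe=("critical", "high")):
    """Fraction of bugs whose severity counts as 'really bad'."""
    if not bugs:
        return 0.0
    return sum(1 for bug in bugs if bug["severity"] in severe) / len(bugs)


def class_counts_by_period(bugs):
    """Map each bug class to a Counter of {period: number of bugs}."""
    trend = {}
    for bug in bugs:
        trend.setdefault(bug["bug_class"], Counter())[bug["period"]] += 1
    return trend


# Invented records; the field names are assumptions for this sketch.
bugs = [
    {"bug_class": "xss", "severity": "high", "period": "2016-Q3"},
    {"bug_class": "xss", "severity": "medium", "period": "2016-Q3"},
    {"bug_class": "xss", "severity": "low", "period": "2016-Q4"},
    {"bug_class": "sqli", "severity": "critical", "period": "2016-Q3"},
]

print(severe_share(bugs))  # 0.5
for bug_class, counts in class_counts_by_period(bugs).items():
    print(bug_class, sorted(counts.items()))
```

Neither number says anything about unfound bugs, so a falling count only means something next to evidence that you are still looking just as hard.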

Some good metrics for a product security team itself:

- Inbound vs outbound-initiated security work

- Engineer-hours saved with a new library or framework

- How long critical bugs were live before fix
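The last of these, how long critical bugs were live, can be approximated from two timestamps per bug. This is a sketch under assumptions: the `shipped_at` and `fixed_at` fields are hypothetical, and in practice the ship date of the vulnerable code is often the hard part to pin down.

```python
from datetime import datetime
from statistics import median


def days_live(shipped_at, fixed_at):
    """Days between the vulnerable code shipping and the fix going out."""
    return (fixed_at - shipped_at).total_seconds() / 86400


def median_days_live(bugs, severity="critical"):
    """Median exposure window for bugs of one severity, or None if there are none."""
    windows = [
        days_live(bug["shipped_at"], bug["fixed_at"])
        for bug in bugs
        if bug["severity"] == severity
    ]
    return median(windows) if windows else None


# Invented records for illustration.
bugs = [
    {"severity": "critical", "shipped_at": datetime(2017, 1, 1), "fixed_at": datetime(2017, 1, 11)},
    {"severity": "critical", "shipped_at": datetime(2017, 2, 1), "fixed_at": datetime(2017, 2, 5)},
    {"severity": "low", "shipped_at": datetime(2016, 6, 1), "fixed_at": datetime(2017, 3, 1)},
]

print(median_days_live(bugs))  # 7.0
```

I use the median here rather than the mean so that one long-lived outlier doesn't swamp the number, but that is a choice, not a rule.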

Opinion — As a group of primarily engineers, we discount the usefulness of nontechnical solutions. Socializing the impact of a bug across the company is a powerful and underused tool to improve security. It’s like a seasoning: don’t overdo it, but I believe most orgs are not doing it enough. I was surprised that one of the most powerful things I’ve done for security is compiling and sharing a pseudo-monthly newsletter of interesting, exciting or representative security bugs we have found in our travels through the codebase. It gets the company talking, and thinking, about security.

Conclusion

There is a lot of Fear, Uncertainty and Doubt when it comes to security. This FUD is unfortunate because it obscures, enhances, trivializes or otherwise misrepresents the true risk of security issues and thus how best to battle and remedy them.

This is a way of thinking about security that has been useful for me, but it’s one of many ways. I wrote it primarily to hear how other people think about this topic. Gathering this understanding of how to approach security optimally means we can work towards higher quality software, which will lead to a safer world.

Thanks

Many thanks to Magoo, Jim O’Leary, Hudson, Robert Fly and Dev for talking over these ideas. Be sure to see Jim’s talk about ideas like this at AppSec California 2017.